PromptLayer

About PromptLayer

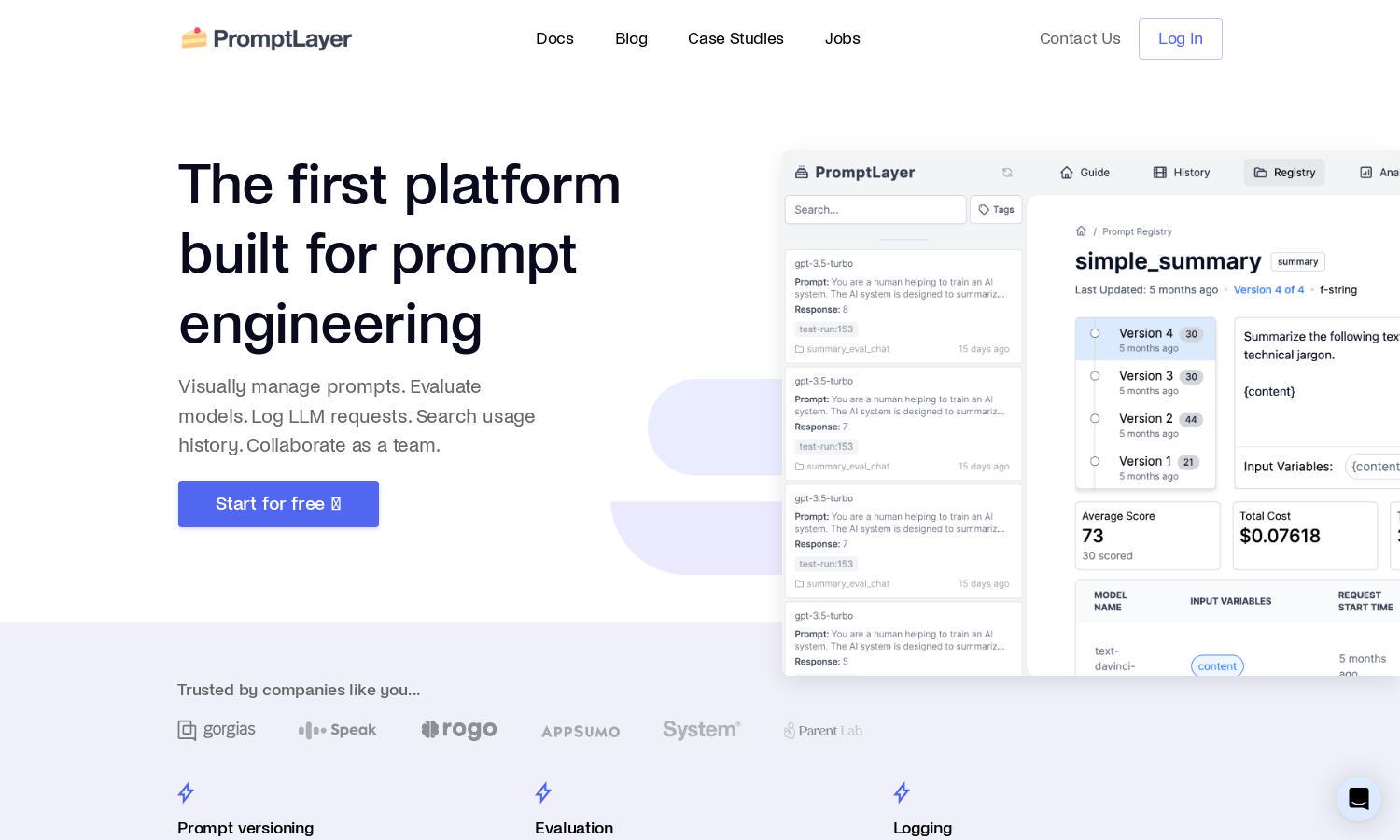

PromptLayer is a prompt engineering platform for AI applications, built for both developers and non-technical stakeholders. Its visual tools simplify prompt management and evaluation. Users can collaborate, analyze performance metrics, and run regression tests to refine prompts and improve product outcomes.

PromptLayer offers several pricing plans, including a free tier for startups and paid subscriptions for larger teams. Upgrading unlocks additional capabilities, such as advanced analytics, extended prompt management features, and collaboration tools, which help teams iterate on and improve AI-driven applications.

PromptLayer has a user-friendly interface with clear navigation and well-organized layouts. Users can easily manage prompts, collaborate with their teams, and analyze data, which helps speed up prompt development.

How PromptLayer works

Users begin by signing up for PromptLayer and are guided through an onboarding process. From there, they use the dashboard to manage and edit prompts, run evaluations, and collaborate with team members. The workflow is designed so that both technical and non-technical users can contribute.

Key Features of PromptLayer

Collaboration Tools

PromptLayer's collaboration tools let non-technical team members iterate on AI prompts themselves, reducing engineering bottlenecks. Users can edit and test prompts directly in the platform's dashboard, which shortens feedback loops and helps teams improve their AI features faster.

Prompt Registry

The Prompt Registry lets users visually manage prompts and keep them under version control. Teams can track changes between versions, run A/B tests, and maintain prompt quality over time, making the prompt development process more organized.

LLM Observability

PromptLayer's LLM observability feature lets users monitor usage and performance metrics. By analyzing request logs and identifying edge cases, users can find where prompts underperform and optimize them to improve the reliability of their AI applications.
