Agenta

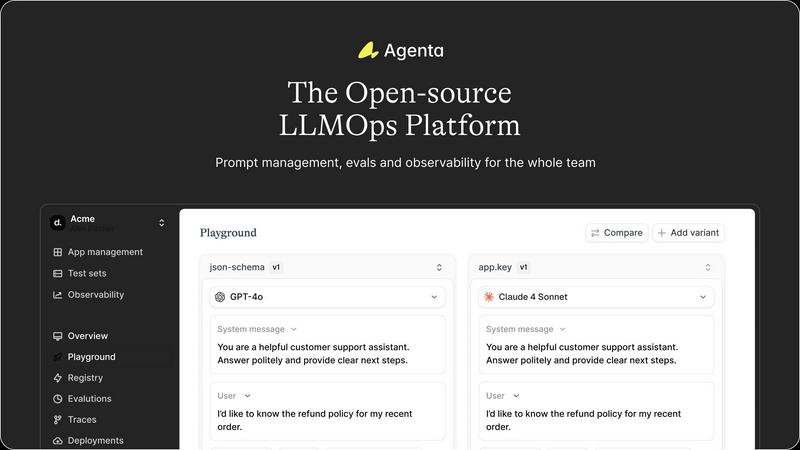

Agenta is the open-source LLMOps platform for building reliable AI applications together.

About Agenta

Agenta is an open-source LLMOps platform for AI teams that build and ship production LLM applications. It targets a common problem in LLM development: prompts are scattered across different tools, teams work in silos, and changes are often deployed without systematic validation. Agenta replaces this fragmented workflow with a structured, collaborative, evidence-driven process. It acts as a single source of truth where developers, product managers, and domain experts experiment with prompts and models, run systematic evaluations, and debug issues. By covering the whole LLM development lifecycle, from experimentation and automated testing to production observability, Agenta helps organizations replace guesswork with measurable evidence and ship AI products that are both innovative and reliable.

Features of Agenta

Unified Experimentation Playground

Agenta provides a central, model-agnostic playground where teams iterate on prompts, parameters, and models from different providers and compare them side by side. This removes the need to switch between vendor consoles and internal documents. Every iteration is versioned automatically, producing an audit trail of changes. You can also debug with real production data, reproducing and fixing issues with actual user inputs and model behavior, which narrows the gap between development and live environments.
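Automatic versioning comes down to an append-only history: each change creates a new immutable record, and any two records can be compared. The sketch below is plain Python that illustrates this idea. The `PromptVersion` and `PromptHistory` classes, their fields, and the `"model-a"` name are hypothetical and do not reflect how Agenta actually stores versions.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical data model for illustration only; not Agenta's storage schema.
@dataclass(frozen=True)
class PromptVersion:
    version: int
    template: str
    model: str
    temperature: float
    author: str
    created_at: datetime

# Fields compared when diffing two versions.
TRACKED_FIELDS = ("template", "model", "temperature")

@dataclass
class PromptHistory:
    versions: list[PromptVersion] = field(default_factory=list)

    def commit(self, template: str, model: str, temperature: float, author: str) -> PromptVersion:
        """Append a new immutable version; earlier versions are never modified."""
        version = PromptVersion(
            version=len(self.versions) + 1,
            template=template,
            model=model,
            temperature=temperature,
            author=author,
            created_at=datetime.now(timezone.utc),
        )
        self.versions.append(version)
        return version

    def diff(self, a: int, b: int) -> dict:
        """Return {field: (old, new)} for tracked fields that differ between versions a and b (1-based)."""
        old, new = self.versions[a - 1], self.versions[b - 1]
        return {
            name: (getattr(old, name), getattr(new, name))
            for name in TRACKED_FIELDS
            if getattr(old, name) != getattr(new, name)
        }

history = PromptHistory()
history.commit("Summarize: {text}", "model-a", 0.7, "pm@example.com")
history.commit("Summarize in three bullets: {text}", "model-a", 0.2, "expert@example.com")
print(history.diff(1, 2))
```

Because the model did not change, the diff reports only the template and temperature changes, along with who made each version and when.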

Automated and Integrated Evaluation Framework

Agenta's evaluation system replaces manual "vibe checks" with a systematic, automated process for validating every change before deployment. It supports a mix of evaluation methods, including LLM-as-a-judge, built-in metrics, and custom code evaluators. For complex agents, you can evaluate the full reasoning trace rather than only the final output, checking that each intermediate step meets your quality standards. Decisions about what to ship then rest on empirical evidence.
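Evaluating an agent's full trace means applying a check to each intermediate step as well as to the final answer. The following plain-Python sketch shows the idea under stated assumptions: the `Step` and `Trace` classes, the step names, and `evaluate_trace` are hypothetical illustrations, not Agenta's trace schema or evaluator API.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical data model for illustration only; not Agenta's trace schema.
@dataclass
class Step:
    name: str
    output: str

@dataclass
class Trace:
    steps: list[Step]
    final_output: str

# A check receives an output string and returns True if it meets the quality bar.
Check = Callable[[str], bool]

def evaluate_trace(trace: Trace, step_checks: dict[str, Check], final_check: Check) -> dict[str, bool]:
    """Run the check registered for each named step, then check the final output."""
    results: dict[str, bool] = {}
    for step in trace.steps:
        check = step_checks.get(step.name)
        if check is not None:  # steps without a registered check are skipped
            results[step.name] = check(step.output)
    results["final"] = final_check(trace.final_output)
    return results

trace = Trace(
    steps=[Step("retrieve", "Paris is the capital of France."), Step("plan", "")],
    final_output="The capital of France is Paris.",
)
checks = {
    "retrieve": lambda out: len(out) > 0,  # retrieval returned some context
    "plan": lambda out: len(out) > 0,      # planner produced a non-empty plan
}
print(evaluate_trace(trace, checks, lambda out: "Paris" in out))
# {'retrieve': True, 'plan': False, 'final': True}
```

Here the final answer is correct, yet the empty `plan` step is flagged, which is exactly the kind of hidden failure that checking only final outputs would miss.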

Comprehensive Production Observability

Full request tracing gives visibility into live LLM applications and lets you pinpoint where complex chains and agentic workflows fail. A problematic trace can be converted into a test case with a single click, closing the loop between observation and experimentation. Live evaluations monitor performance in real time, helping you detect regressions early and keep quality consistent for end users.
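Turning a trace into a test case amounts to locating the failing span, then keeping the original user input together with a corrected expected output. The sketch below illustrates that flow in plain Python. The `Span`/`Trace` structure, `first_failure`, `to_test_case`, and the sample values are hypothetical; Agenta's real trace format and its one-click conversion work differently in detail.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical trace model for illustration only; not Agenta's trace format.
@dataclass
class Span:
    name: str
    inputs: dict
    output: str
    error: Optional[str] = None

@dataclass
class Trace:
    trace_id: str
    spans: list[Span]  # ordered root-first: spans[0] holds the user's request

def first_failure(trace: Trace) -> Optional[Span]:
    """Return the first span that recorded an error, or None if every span succeeded."""
    return next((span for span in trace.spans if span.error is not None), None)

def to_test_case(trace: Trace, expected_output: str) -> dict:
    """Build a test-set row from the root span's inputs and a reviewer-supplied answer."""
    root = trace.spans[0]
    return {
        "inputs": dict(root.inputs),
        "expected_output": expected_output,
        "source_trace": trace.trace_id,  # keep provenance for later debugging
    }

trace = Trace("t-123", [
    Span("chat", {"question": "What is the refund window?"}, ""),
    Span("retrieve", {"query": "refund policy"}, "", error="timeout"),
])
print(first_failure(trace).name)  # retrieve
print(to_test_case(trace, "30 days"))
```

Storing the source trace ID with each test case makes it possible to go back from a failing regression test to the production request that originally exposed the problem.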

Cross-Functional Collaboration Hub

Agenta provides interfaces suited to each role on a team. Domain experts and product managers can experiment with prompts and run evaluations through the web UI without writing code. Developers keep full control through an API, and the programmatic and UI workflows offer the same capabilities. Technical and non-technical stakeholders can therefore experiment, compare results, and make data-driven decisions in one place.

Use Cases of Agenta

Enterprise AI Product Development

Large organizations developing customer-facing LLM applications, such as chatbots or content generation tools, use Agenta to establish a governed, multi-team workflow. Prompts are managed systematically, changes are validated against a test suite, and production issues can be diagnosed quickly and turned into regression tests, which helps maintain reliability at scale.

AI Startup Rapid Iteration

Fast-moving startups that build their core product on LLMs benefit from Agenta's integrated experimentation and evaluation cycle. Small teams can iterate on prompts and models quickly and with confidence, using automated evaluations to measure the impact of each experiment. This shortens the path to product-market fit without sacrificing quality.

Complex Agentic System Debugging

Teams building multi-step AI agents and workflows use Agenta's observability to debug hard-to-reproduce failures. By tracing every step and examining intermediate reasoning, developers can isolate the exact point where a chain fails. Saving any trace as a test case makes it straightforward to build test sets that cover edge cases and complex scenarios.

Regulatory Compliance and Audit Preparation

In regulated industries, Agenta provides version control, audit trails, and validation evidence for LLM systems. Teams can demonstrate a controlled development process, show how prompts were tested and evaluated, and produce a complete history of changes, all of which supports compliance and governance requirements.

Frequently Asked Questions

Is Agenta truly model and framework agnostic?

Yes. Agenta is designed with flexibility as a core principle. It works with major LLM providers (OpenAI, Anthropic, Cohere, and others) and with popular development frameworks such as LangChain and LlamaIndex. This avoids vendor lock-in and lets your team choose the best model for each task while managing everything in one platform.

How does Agenta facilitate collaboration with non-technical team members?

Agenta features a purpose-built, intuitive web interface that allows product managers, subject matter experts, and other non-coders to participate directly in the LLM development process. They can edit prompts in a safe playground environment, configure and view evaluation results, and provide feedback on production traces without needing to understand the underlying code or infrastructure.

What types of evaluations can I run on the platform?

Agenta supports several evaluation strategies. You can use LLM-as-a-judge setups for nuanced, criteria-based scoring, built-in evaluators for metrics such as correctness or toxicity, or your own custom Python code for domain-specific assessments. The platform also supports human-in-the-loop evaluations, so qualitative feedback from experts can feed directly into your evaluation workflow.
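A custom code evaluator is, at its core, a function that maps an application output to a score. The sketch below is a plain-Python example of a domain-specific check, assuming the application is expected to return a JSON object with certain keys. The function name `score_json_output` and its parameters are hypothetical; Agenta's own custom-evaluator interface may expect a different signature, so consult its documentation before adapting this.

```python
import json
from statistics import mean

def score_json_output(output: str, required_keys: list[str]) -> float:
    """Return the fraction of required keys present in a JSON object output.

    Output that is not valid JSON, or is valid JSON but not an object, scores 0.0.
    """
    try:
        parsed = json.loads(output)
    except json.JSONDecodeError:
        return 0.0
    if not isinstance(parsed, dict):
        return 0.0
    if not required_keys:
        return 1.0  # nothing required, so any JSON object passes
    present = sum(1 for key in required_keys if key in parsed)
    return present / len(required_keys)

outputs = [
    '{"name": "Ada", "email": "ada@example.com"}',
    '{"name": "Bob"}',
    'not json',
]
scores = [score_json_output(o, ["name", "email"]) for o in outputs]
print(scores)        # [1.0, 0.5, 0.0]
print(mean(scores))  # 0.5
```

Returning a graded score instead of a pass/fail flag lets the aggregate across a test set show partial improvements between prompt versions.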

How does the observability feature help with production issues?

Agenta's observability traces every user request through your entire LLM application. When an error or a poor output occurs, you can drill down into the trace to see the full input, the LLM calls, the intermediate steps, and the final output, so you do not have to guess where things went wrong. Your team can then annotate the trace and save it as a test case, so future changes are checked against it and the issue does not silently return.

Pricing of Agenta

Agenta is an open-source platform, and its core codebase is freely available for download, self-hosting, and modification under its open-source license. Teams of any size can use its core features without upfront cost. For organizations that want a managed, hosted solution with additional enterprise-grade features, support, and scalability, Agenta offers commercial plans. Details on these tiers, including specific features and pricing, are available on the Agenta website under the "Pricing" section. You can also contact the team directly to "Book a demo" for a personalized overview.